This section contains the following topics:

Configure CA SiteMinder® Agent to Policy Server Connection Lifetime

Monitoring the Health of Hardware Load Balancing Configurations

CA SiteMinder® supports the use of hardware load balancers configured to expose multiple Policy Servers through one or more virtual IP addresses (VIPs). The hardware load balancer then dynamically distributes request load between all Policy Servers associated with that VIP. The following hardware load balancing configurations are supported:

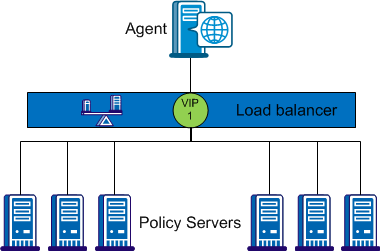

Single VIP, Multiple Policy Servers Per VIP

In the configuration shown in the previous diagram, the load balancer exposes multiple Policy Servers using a single VIP. This scenario presents a single point of failure if the load balancer handling the VIP fails.

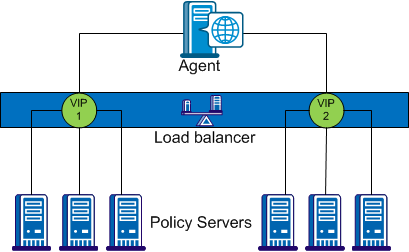

Multiple VIPs, Multiple Policy Servers Per VIP

In the configuration shown in the previous diagram, groups of Policy Servers are exposed as separate VIPs by one or more load balancers. If multiple load balancers are used, this amounts to failover between load balancers, thus eliminating a single point of failure. However, all major hardware load balancer vendors handle failover between multiple similar load balancers internally such that only a single VIP is required. If you are using redundant load balancers from the same vendor, you can therefore configure Agent to Policy Server communication with a single VIP and still have robust load balancing and failover.

Note: If you are using a hardware load balancer to expose Policy Servers as multiple virtual IP addresses (VIPs), we recommend that you configure those VIPs in a failover configuration. Round robin load balancing is redundant as the hardware load balancer performs the same function more efficiently.

Once established, the connection between an Agent and a Policy Server is maintained for the duration of the session. Therefore, a hardware load balancer only handles the initial connection request. All further traffic on the same connection goes to the same Policy Server until that connection is terminated and new Agent connections are established.

By default, the Policy Server connection lifetime is 360 minutes, which is typically too long for hardware load balancing to be effective. To help ensure that all Agent connections are renewed frequently enough for effective load balancing, configure the maximum Agent connection lifetime on the Policy Server.
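The effect of connection lifetime on load distribution can be illustrated with a toy simulation. This is only a sketch under stated assumptions: the server names (`ps1`, `ps2`, `ps3`), the least-connections choice at connect time, and the fact that all Agents reconnect in the same minute are simplifications invented for illustration, not SiteMinder or load balancer behavior.

```python
from collections import Counter


def simulate(lifetime_min, total_min=120, agents=12, join_at=30):
    """Return connections per server after total_min simulated minutes.

    Hypothetical model: the load balancer chooses a server only when a
    connection is opened, and a third server joins the pool at join_at.
    """
    pool = ["ps1", "ps2"]   # hypothetical Policy Servers behind one VIP
    assigned = {}           # agent id -> server holding its connection

    def pick():
        # Least-connections choice, made only at connect time.
        counts = Counter(assigned.values())
        return min(pool, key=lambda server: counts[server])

    for agent in range(agents):
        assigned[agent] = pick()

    for minute in range(1, total_min + 1):
        if minute == join_at:
            pool.append("ps3")          # new capacity becomes available
        if minute % lifetime_min == 0:  # connection lifetime expired
            for agent in range(agents):
                del assigned[agent]
                assigned[agent] = pick()

    return Counter(assigned.values())


if __name__ == "__main__":
    print("lifetime 360:", dict(simulate(360)))
    print("lifetime 15: ", dict(simulate(15)))
```

In this model, connections with a 360-minute lifetime never reach the server that joins later within the simulated period, while a 15-minute lifetime lets the load rebalance across all three servers at the next renewal.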

To configure the maximum connection lifetime for a Policy Server, set the following parameter:

AgentConnectionMaxLifetime

Specifies the maximum Agent connection lifetime in minutes.

Default: 0. Sets no specific value; only the SiteMinder default connection lifetime (360 minutes) limit is enforced.

Limits: 0 - 360

Example: 15
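The relationship between the configured value and the lifetime that is actually enforced can be sketched as follows. The function name and constants are hypothetical and exist only to restate the default and limits described above; this is not part of any SiteMinder API.

```python
SITEMINDER_DEFAULT_LIFETIME_MIN = 360  # default limit stated in this section
MAX_CONFIGURABLE_MIN = 360             # upper limit stated in this section


def effective_lifetime(configured_min: int) -> int:
    """Return the connection lifetime (minutes) implied by a configured value.

    Hypothetical helper: 0 means "no specific value", so only the
    360-minute default applies.
    """
    if not 0 <= configured_min <= MAX_CONFIGURABLE_MIN:
        raise ValueError("value must be between 0 and 360 minutes")
    if configured_min == 0:
        return SITEMINDER_DEFAULT_LIFETIME_MIN
    return configured_min


if __name__ == "__main__":
    for value in (0, 15, 360):
        print(value, "->", effective_lifetime(value))
```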

Note: If you do not have write access to the CA SiteMinder® binary files (XPS.dll, libXPS.so, libXPS.sl), an Administrator must grant you permission to use the related XPS command line tools using the Administrative UI or the XPSSecurity tool.

The AgentConnectionMaxLifetime parameter is dynamic; you can change its value without restarting the Policy Server.

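To configure the maximum Agent connection lifetime for hardware load balancers:

1. Open a command prompt and enter the following command:

   xpsconfig

   The tool starts and displays the name of the log file for this session, and a menu of choices opens.

2. Enter the following:

   sm

   A list of options appears.

3. Select the AgentConnectionMaxLifetime parameter from the list.

   The AgentConnectionMaxLifetime parameter menu appears.

4. Choose the option to change the value.

   The tool prompts you whether to apply the change locally or globally.

5. Choose local or global as required, and then enter the new value in minutes. For example:

   30

   The AgentConnectionMaxLifetime parameter menu reappears, showing the new value. If a local override value is set, both the global and local values are shown.

6. Exit the tool.

   Your changes are saved and the command prompt appears.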

Different hardware load balancers provide various methods of determining the health of the hardware and applications that they serve. This section describes general recommendations rather than vendor-specific cases.

Server health determination is complicated by the fact that SiteMinder health and load may not be the only considerations for the load balancer. For example, a relatively unburdened Policy Server can be running on a system that is otherwise burdened by another process. The load balancer should therefore also take into account the state of the server itself (CPU, memory usage, and disk activity).

Hardware load balancers can use active monitors to poll the hardware or application for status information. Each major vendor supports various active monitors. This topic describes several of the most common monitors and their suitability for monitoring the Policy Server.

The TCP Half Open monitor performs a partial TCP/IP handshake with the Policy Server. The monitor sends a SYN packet to the Policy Server. If the Policy Server is up, it sends a SYN-ACK back to the monitor to indicate that it is healthy.
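A true half-open probe sends a raw SYN and typically resets the connection after the SYN-ACK arrives, which requires raw-socket privileges and is normally done by the load balancer itself. The sketch below is therefore only an unprivileged approximation: it completes the handshake and closes the connection immediately without sending any application data. The function name, host, and port are hypothetical.

```python
import socket


def tcp_port_reachable(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be established.

    Approximation only: this completes the full three-way handshake,
    unlike a half-open monitor, but sends no payload before closing.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    # Hypothetical address; substitute a real Policy Server host and port.
    print(tcp_port_reachable("127.0.0.1", 9, timeout=0.5))
```

Like the half-open monitor, this check only shows that something is accepting connections on the port; it says nothing about queue depth or thread usage.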

An SNMP monitor can query the SiteMinder MIB to determine the health of the Policy Server. A sophisticated implementation can query values in the MIB such as queue depth, socket count, threads in use, and threads available. SNMP monitoring is therefore the most suitable method for getting an in-depth sense of Policy Server health.
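Once values such as these have been retrieved, a monitor needs a rule for turning them into a health verdict. The following is a minimal sketch of such a rule. It assumes the values have already been fetched by some SNMP client; the class, field names, and thresholds are hypothetical, and "threads available" is assumed to mean idle threads.

```python
from dataclasses import dataclass


@dataclass
class PolicyServerStats:
    """Values assumed to have been read from the MIB (hypothetical names)."""
    queue_depth: int
    threads_in_use: int
    threads_available: int  # assumed to mean idle threads


def is_healthy(stats: PolicyServerStats,
               max_queue_depth: int = 50,
               max_thread_utilization: float = 0.9) -> bool:
    """Return True if the server looks able to accept more load.

    The thresholds are illustrative defaults, not vendor recommendations.
    """
    total_threads = stats.threads_in_use + stats.threads_available
    if total_threads == 0:
        return False  # no worker threads reported; treat as unhealthy
    utilization = stats.threads_in_use / total_threads
    return (stats.queue_depth <= max_queue_depth
            and utilization <= max_thread_utilization)


if __name__ == "__main__":
    print(is_healthy(PolicyServerStats(5, 4, 6)))     # lightly loaded
    print(is_healthy(PolicyServerStats(200, 10, 0)))  # saturated
```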

To enable SNMP monitoring, configure the SiteMinder OneView Monitor and SNMP Agent on each Policy Server. For more information, refer to Using the OneView Monitor and Monitoring CA SiteMinder® Using SNMP.

Note: Not all hardware load balancers provide out-of-the-box SNMP monitoring.

The ICMP health monitor sends ICMP echo requests (pings) to almost any networked hardware to see if it is online. Because the ICMP monitor only shows that the host responds on the network and does little to prove that the Policy Server is healthy, it is not recommended for monitoring Policy Server health.

The TCP Open Monitor performs a full TCP/IP handshake with a networked application. The monitor sends well-known text to a networked application; the application must then respond to indicate that it is up. Because the Policy Server uses end-to-end encryption of TCP/IP connections and a proprietary messaging protocol, TCP Open Monitoring is unsuitable for monitoring Policy Server health.

In-band health monitors run on the hardware load balancer and analyze the traffic that flows through them. They are lower impact than active monitors and impose very little overhead on the load balancer.

In-band monitors can be configured to detect a particular failure rate before failing over. In-band monitors on some load balancers can detect issues with an application and specify an active monitor that will determine when the issue has been resolved and the server is available once again.
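The combination of failure-rate detection and an active recovery check can be sketched as a small state machine. This is an illustrative model only: the class name, window size, and threshold are hypothetical, and real load balancers implement this logic internally with vendor-specific settings.

```python
from collections import deque


class InBandMonitor:
    """Marks a server unavailable when the observed failure rate is too high."""

    def __init__(self, window: int = 20, max_failure_rate: float = 0.5):
        self.results = deque(maxlen=window)  # most recent request outcomes
        self.max_failure_rate = max_failure_rate
        self.available = True

    def observe(self, success: bool) -> None:
        """Record the outcome of one request seen in normal traffic."""
        if not self.available:
            return  # no traffic is sent to a failed server
        self.results.append(success)
        if len(self.results) == self.results.maxlen:
            failure_rate = self.results.count(False) / len(self.results)
            if failure_rate > self.max_failure_rate:
                self.available = False

    def active_check(self, probe) -> bool:
        """Run an active probe to decide whether a failed server is back."""
        if not self.available and probe():
            self.results.clear()
            self.available = True
        return self.available


if __name__ == "__main__":
    monitor = InBandMonitor(window=4, max_failure_rate=0.5)
    for outcome in (True, False, False, False):
        monitor.observe(outcome)
    print("available after failures:", monitor.available)
    print("available after probe:", monitor.active_check(lambda: True))
```

The `probe` argument stands in for whichever active monitor the load balancer is configured to use for recovery detection.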

Copyright © 2013 CA. All rights reserved.