Applies to CA6000 and CA6300 appliances

Problem: When the appliance restarts, the terminal displays a kernel panic preceded by an XFS call stack similar to the following:

    RIP [<ffffffff883cf607>] :xfs:xfs_error_report+0xf/0x58
    RSP <ffff81028c817c28>
    CR2: 0000000000000118
    <0> Kernel panic - not syncing - Fatal exception

Resolution: We recommend recovering a corrupted XFS file system as soon as possible. Typically, XFS file system corruption causes a Linux kernel panic and system halt similar to the above.
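If the console output was captured to a file (for example, from a serial console), you can check it for this signature. The following is a minimal sketch; the function name has_xfs_panic and the log file are hypothetical, and the appliance does not necessarily save console output.

```shell
#!/bin/sh
# Minimal sketch: report whether a saved console log contains the XFS panic
# signature from this article. has_xfs_panic and the log file are hypothetical.
has_xfs_panic() {
    log_file="$1"
    # Both patterns come from the example call stack in this article.
    grep -q 'xfs_error_report' "$log_file" &&
        grep -q 'Kernel panic - not syncing' "$log_file"
}
```

For example, `has_xfs_panic console.log && echo "XFS panic found"` prints the message only when both patterns appear in the file.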

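The CA Multi-Port Monitor appliance uses the high-performance Linux XFS file system on two partitions, /nqxfs and /data. The block devices behind these partitions depend on the appliance, so check which of the following layouts applies to yours (for example, with the mount command).

Layout with /dev/sdb1 and /dev/sdb2:

/dev/sdb1 mounted on /nqxfs hosts the Vertica metrics database.

/dev/sdb2 mounted on /data hosts the CA Multi-Port Monitor packet capture storage.

Layout with /dev/sda4 and /dev/sdb1:

/dev/sda4 mounted on /nqxfs hosts the Vertica metrics database.

/dev/sdb1 mounted on /data hosts the CA Multi-Port Monitor packet capture storage.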
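Because /dev/sdb1 backs /nqxfs on some appliances and /data on others, it helps to confirm which device is mounted on each partition before repairing anything. The following is a minimal sketch that reads /proc/mounts; the function name device_for_mount is hypothetical and not part of the appliance software.

```shell
#!/bin/sh
# Minimal sketch (hypothetical helper): print the block device mounted on a
# mount point by reading /proc/mounts. The optional second argument names an
# alternate mounts file and exists only for testing.
device_for_mount() {
    mount_point="$1"
    mounts_file="${2:-/proc/mounts}"
    # /proc/mounts fields: device, mount point, fs type, options, dump, pass.
    # Keep the last match (the most recent mount) and fail if none is found.
    awk -v mp="$mount_point" '$2 == mp { dev = $1 }
        END { if (dev == "") exit 1; print dev }' "$mounts_file"
}
```

For example, `device_for_mount /nqxfs` prints /dev/sdb1 or /dev/sda4, depending on the layout.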

XFS file system corruption typically occurs when the appliance experiences a power outage or hardware hang.

The Linux kernel panic is most likely to occur on the /nqxfs partition shortly after the appliance restarts, when the Vertica metrics database starts. The terminal display shows an XFS call stack followed by a kernel panic, as in the example above. The affected partition may not be identified in this output, but you can safely run xfs_repair on both XFS partitions (/nqxfs and /data) to make sure all XFS file system corruption is repaired.

Repair an XFS file system to resolve corruption on that file system. If the corruption occurred on the /nqxfs partition, where the Vertica metrics database resides, recreate the Vertica metrics database.
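Before repairing, you can optionally check each partition in no-modify mode. According to the xfs_repair(8) manual page, `xfs_repair -n` returns exit status 1 when it detects corruption and 0 when it does not, and the file system must be unmounted first. The sketch below assumes the partitions are already unmounted; check_xfs_devices and the XFS_REPAIR override are hypothetical names added for illustration and testing.

```shell
#!/bin/sh
# Minimal sketch (hypothetical helper): check each unmounted XFS device in
# no-modify mode (-n) and report which ones are not clean.
# XFS_REPAIR can be overridden (for example, with a stub) for testing.
XFS_REPAIR="${XFS_REPAIR:-xfs_repair}"

check_xfs_devices() {
    status=0
    for dev in "$@"; do
        # Output is hidden; run "xfs_repair -n <device>" by hand for details.
        # Any nonzero status (corruption or a failed check) is reported.
        if "$XFS_REPAIR" -n "$dev" >/dev/null 2>&1; then
            echo "$dev: clean"
        else
            echo "$dev: not clean"
            status=1
        fi
    done
    return "$status"
}
```

For example, on the layout with /dev/sdb1 and /dev/sdb2, `check_xfs_devices /dev/sdb1 /dev/sdb2` prints one line per device and returns 1 if either device needs attention.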


Repair a damaged or corrupt XFS file system by running the xfs_repair command on the affected partition. After you repair XFS file system corruption on the /nqxfs partition, recreate the Vertica metrics database.

Estimated time to complete XFS repair: 30-60 minutes

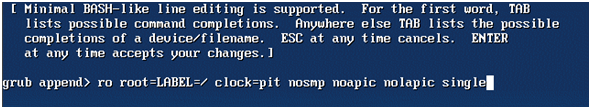

Follow these steps:

Note: In single user mode, the appliance can only be accessed from the terminal display.

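For each XFS partition, unmount it and run xfs_repair on its block device. Use the commands that match your appliance's partition layout, and make sure you pass the correct device: /dev/sdb1 is the /nqxfs device in the first layout below but the /data device in the second.

On appliances where /dev/sdb1 is mounted on /nqxfs and /dev/sdb2 is mounted on /data:

    umount /nqxfs
    xfs_repair /dev/sdb1

    umount /data
    xfs_repair /dev/sdb2

On appliances where /dev/sda4 is mounted on /nqxfs and /dev/sdb1 is mounted on /data:

    umount /nqxfs
    xfs_repair /dev/sda4

    umount /data
    xfs_repair /dev/sdb1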
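To avoid passing the wrong device, the unmount and repair steps can look up the device in /proc/mounts first. The following is a minimal sketch; the function name repair_mount_point and the RUN dry-run variable are hypothetical additions, not part of the appliance software.

```shell
#!/bin/sh
# Minimal sketch (hypothetical helper): unmount one XFS mount point and
# repair its block device. The device is read from /proc/mounts before
# unmounting, so the same call works for either partition layout.
# Set RUN=echo to print the commands instead of running them (dry run).
RUN="${RUN:-}"

repair_mount_point() {
    mount_point="$1"
    mounts_file="${2:-/proc/mounts}"   # second argument is for testing only
    dev=$(awk -v mp="$mount_point" '$2 == mp { d = $1 } END { print d }' "$mounts_file")
    if [ -z "$dev" ]; then
        echo "$mount_point is not mounted; run xfs_repair on its device directly" >&2
        return 1
    fi
    $RUN umount "$mount_point" || return 1
    $RUN xfs_repair "$dev"
}
```

For example, run `repair_mount_point /nqxfs` and then `repair_mount_point /data`. If a partition is not mounted, /proc/mounts does not list it; in that case, run xfs_repair on the device for your layout as listed above.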

The xfs_repair command reports its progress in seven phases. Output similar to the following is displayed:

    Phase 1 - find and verify superblock...
    Phase 2 - zero log...
            - scan file system freespace and inode maps...
            - found root inode chunk
    Phase 3 - for each AG...
            - scan and clear agi unlinked lists...
            - process known inodes and perform inode discovery...
            - agno = 0
            - agno = 1
            ...
            - process newly discovered inodes...
    Phase 4 - check for duplicate blocks...
            - setting up duplicate extent list...
            - clear lost+found (if it exists) ...
            - clearing existing "lost+found" inode
            - deleting existing "lost+found" entry
            - check for inodes claiming duplicate blocks...
            - agno = 0
    imap claims in-use inode 242000 is free, correcting imap
            - agno = 1
            - agno = 2
            ...
    Phase 5 - rebuild AG headers and trees...
            - reset superblock counters...
    Phase 6 - check inode connectivity...
            - ensuring existence of lost+found directory
            - traversing file system starting at / ...
            - traversal finished ...
            - traversing all unattached subtrees ...
            - traversals finished ...
            - moving disconnected inodes to lost+found ...
    disconnected inode 242000, moving to lost+found
    Phase 7 - verify and correct link counts...
    Done
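In Phase 6, xfs_repair moves disconnected inodes into the lost+found directory at the root of the repaired partition, where they are typically named after their inode numbers. If you save the output (for example, with `xfs_repair /dev/sdb1 2>&1 | tee repair.log`, where repair.log is a hypothetical file name), the following minimal sketch lists the moved inode numbers. The function name moved_inodes is hypothetical, and the match patterns assume the message format shown in the sample output above.

```shell
#!/bin/sh
# Minimal sketch (hypothetical helper): print the inode numbers that
# xfs_repair reported moving to lost+found in a saved output log.
# Matches "disconnected inode N, moving to lost+found" and the
# "disconnected dir inode N, ..." variant.
moved_inodes() {
    sed -n 's/^[[:space:]]*disconnected \(dir \)\{0,1\}inode \([0-9][0-9]*\), moving to lost+found.*/\2/p' "$1"
}
```

For the sample output above, `moved_inodes repair.log` prints 242000.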

When you restart the appliance, the repaired partition should no longer trigger a Linux kernel panic.
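After the restart, you can confirm that both XFS partitions were mounted again. The following is a minimal sketch that reads /proc/mounts; the function name check_xfs_mounted is hypothetical.

```shell
#!/bin/sh
# Minimal sketch (hypothetical helper): confirm that /nqxfs and /data are
# mounted with file system type xfs. The optional first argument names an
# alternate mounts file and exists only for testing.
check_xfs_mounted() {
    mounts_file="${1:-/proc/mounts}"
    missing=0
    for mp in /nqxfs /data; do
        # awk exits 0 only if a line has this mount point and type xfs.
        if awk -v mp="$mp" '$2 == mp && $3 == "xfs" { found = 1 } END { exit !found }' "$mounts_file"; then
            echo "$mp: mounted (xfs)"
        else
            echo "$mp: NOT mounted as xfs"
            missing=1
        fi
    done
    return "$missing"
}
```

Running `check_xfs_mounted` with no arguments checks the live system; `dmesg | grep -i xfs` can also show any XFS messages logged during the restart.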


Copyright © 2015 CA Technologies.

All rights reserved.
